Test Drive: Whisper v3 Turbo

Published on .

Today I’m test driving mlx-whisper (“OpenAI Whisper on Apple silicon with MLX and the Hugging Face Hub”), since OpenAI just published Whisper v3 Turbo, which @andi_marafioti kindly converted to MLX format. I saw a tweet about a 12x speedup and I have an M1 Pro, so I wanted to give it a try. Years ago I converted some East Boston Oral History cassette tapes to digital audio, so I figured I’d transcribe them.

The process will be:

- Install mlx-whisper

- Download model

- Transcribe

The code is simple:

import mlx_whisper

result = mlx_whisper.transcribe(

"/Users/ntaylor/conal_foley.mp3",

path_or_hf_repo="mlx-community/whisper-large-v3-turbo",

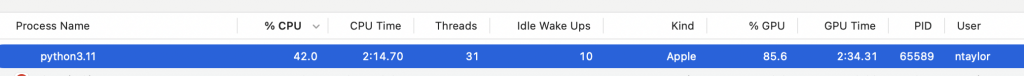

)In this screenshot you can see it brrrrr-ing away on my GPU.
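Once the call returns, the transcript has to come out of `result`. Here is a minimal sketch for printing it with timestamps, assuming `result` follows the reference Whisper layout: a dict with a `text` string and a `segments` list whose entries carry `start`, `end`, and `text`. The `format_segments` helper and the `sample` dict are made up for illustration and stand in for a real transcription result.

```python
def format_segments(result):
    """Return one '[MM:SS - MM:SS] text' line per transcribed segment."""

    def mmss(seconds):
        # Truncate to whole seconds and split into minutes and seconds.
        minutes, secs = divmod(int(seconds), 60)
        return f"{minutes:02d}:{secs:02d}"

    lines = []
    for seg in result.get("segments", []):
        start, end = mmss(seg["start"]), mmss(seg["end"])
        lines.append(f"[{start} - {end}] {seg['text'].strip()}")
    return lines


# Hypothetical sample shaped like the assumed result layout.
sample = {
    "text": " Now this is going on where? This is, oh, yeah.",
    "segments": [
        {"start": 0.0, "end": 2.5, "text": " Now this is going on where?"},
        {"start": 62.5, "end": 65.0, "text": " This is, oh, yeah."},
    ],
}

for line in format_segments(sample):
    print(line)
```

If the layout assumption holds, passing the real `result` instead of `sample` gives a transcript that is easy to check against the audio.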

The result is impressive. In just 4 minutes 9 seconds, it transcribed a 55-minute audio file into about 10,000 words, which is roughly 13x faster than real time. Wow!
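The 13x figure is just audio duration divided by processing time. A quick sketch of the arithmetic, using the numbers above (the `realtime_factor` helper is a name invented here):

```python
def realtime_factor(audio_seconds, processing_seconds):
    """How many seconds of audio are transcribed per second of compute."""
    return audio_seconds / processing_seconds


audio_seconds = 55 * 60          # 55-minute recording
processing_seconds = 4 * 60 + 9  # 4 minutes 9 seconds

factor = realtime_factor(audio_seconds, processing_seconds)
print(f"{factor:.1f}x real time")
```

That works out to a little over 13 seconds of audio per second of compute.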

I spot-checked the quality and it is quite good, although it went crazy at the very end:

Now this is going on where? This is, oh, yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah. Yeah."
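A run like this can be tidied after the fact. The sketch below is a post-processing idea and not part of mlx-whisper: it splits the text into sentences with a simple regex and keeps at most `max_repeats` consecutive copies of the same sentence. The `collapse_repeats` name and the default of 2 are arbitrary choices for illustration.

```python
import re


def collapse_repeats(text, max_repeats=2):
    """Keep at most max_repeats consecutive copies of an identical sentence."""
    # Naive split: a sentence is a run of text ending in ., !, or ?
    # (or running to the end of the string).
    sentences = re.findall(r"[^.!?]+(?:[.!?]+|$)", text)

    kept = []
    previous, run_length = None, 0
    for sentence in sentences:
        cleaned = sentence.strip()
        if not cleaned:
            continue
        key = cleaned.lower()
        if key == previous:
            run_length += 1
        else:
            previous, run_length = key, 1
        if run_length <= max_repeats:
            kept.append(cleaned)
    return " ".join(kept)


ending = "Now this is going on where? This is, oh, yeah. Yeah. Yeah. Yeah. Yeah. Yeah."
print(collapse_repeats(ending))
```

This only hides the symptom. The comparison is exact apart from case, so near-identical repeats slip through, and it will also trim genuine repetition in the speech.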

You can listen at https://nattaylor.com/eastboston/east-boston-oral-history/