Test Drive: SambaNova


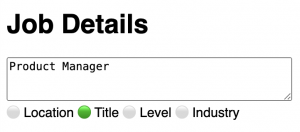

Today I’m test driving SambaNova, which bills itself as “the World’s Fastest AI Inference.” Inference speed is typically measured in tokens per second, and SambaNova claims to be 10X faster. I’ve been imagining free-text inputs that run live validation on what an LLM extracts from them. For example, imagine a textarea where a user enters job details like “product manager in boston”.

The process is:

- Get an API key from SambaNova

- Write a prompt

- Call the model
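For the first step, here is a minimal sketch of checking that the key is available before making any calls. It assumes the key has been exported as `SAMBANOVA_API_KEY`, which I believe is the variable LiteLLM's SambaNova provider reads; `require_api_key` is a hypothetical helper, not part of any library.

```python
import os


def require_api_key(env_var: str = "SAMBANOVA_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message.

    Hypothetical helper: the default variable name is an assumption about
    how the key was exported.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} before calling the model")
    return key
```

Failing early like this gives a clearer error than a rejected request halfway through a demo.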

Here’s my sample code, which runs in about 500ms. So… yes, you could do live validation. Neat!

```python
from textwrap import dedent

from litellm import completion

# Assumes SAMBANOVA_API_KEY is set in the environment.
response = completion(
    model="sambanova/Meta-Llama-3.1-8B-Instruct",
    messages=[
        {"role": "system", "content": dedent("""\
            Extract the job details as JSON with keys:
            - location: str
            - job_title: str
            - level: Literal['junior', 'mid', 'senior']
            Respond only with valid JSON. Do not write an introduction or summary.
            """)},
        {
            "role": "user",
            "content": dedent("""\
                VP Product in boston
                """),
        },
    ],
)

print(response.choices[0].message.content)
# {
#     "location": "Boston",
#     "job_title": "VP Product",
#     "level": "senior"
# }
```
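For live validation, the reply still has to be parsed and checked before anything is shown to the user. Here is a minimal sketch, assuming the model returns the three keys requested in the prompt. `validate_job_details` and `timed` are hypothetical helpers, and the rules they apply (non-empty strings, `level` limited to the three listed values) are assumptions drawn from the prompt, not something the API enforces.

```python
import json
import time
from typing import Optional

ALLOWED_LEVELS = {"junior", "mid", "senior"}
REQUIRED_KEYS = ("location", "job_title", "level")


def validate_job_details(raw: str) -> tuple[Optional[dict], list[str]]:
    """Parse the model's reply and return (details, errors).

    details is None whenever errors is non-empty.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["expected a JSON object"]

    errors = []
    # Every required key must be present and hold a non-empty string.
    for key in REQUIRED_KEYS:
        value = data.get(key)
        if not isinstance(value, str) or not value.strip():
            errors.append(f"{key}: missing or not a non-empty string")

    # The level must be one of the values listed in the prompt.
    level = data.get("level")
    if isinstance(level, str) and level.strip() and level not in ALLOWED_LEVELS:
        errors.append(f"level: {level!r} is not one of {sorted(ALLOWED_LEVELS)}")

    return (data if not errors else None), errors


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_milliseconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, (time.perf_counter() - start) * 1000
```

Wrapping the call as `timed(completion, model=..., messages=...)` and passing `response.choices[0].message.content` to `validate_job_details` would show whether a given input stays within a live-validation latency budget and yields usable fields.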