Test Drive: Gemma APS


Today I’m test driving Gemma APS, “models for text-to-propositions segmentation.” “Abstractive proposition segmentation” (aka claim extraction) is a new concept to me, aimed at problems such as fact-checking. Amazon’s RefChecker is another research effort in this space. The idea is to break a passage down into simple, individual claims with minimal changes to the text so that they can be processed independently. The process is simple:

- Accept the Gemma terms and log in to Hugging Face

- Implement :)
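
For the login step, here is a minimal sketch of one way to authenticate from Python. It assumes the `huggingface_hub` package is installed and that an access token for an account that has accepted the Gemma terms is stored in an environment variable. The helper name `hf_login_from_env` and the choice of `HF_TOKEN` as the variable are my own, for illustration only.

```python
import os


def hf_login_from_env(var: str = "HF_TOKEN") -> None:
    """Log in to Hugging Face using a token read from an environment variable.

    Hypothetical helper: the name and the default variable are assumptions.
    """
    token = os.environ.get(var)
    if not token:
        raise RuntimeError(f"Set {var} to a Hugging Face access token first.")
    # Imported lazily so the missing-token check works without the package.
    from huggingface_hub import login
    login(token=token)


# Typical use, once the token is set in the environment:
# hf_login_from_env()
```

Running `huggingface-cli login` in a terminal is an alternative if you prefer to paste the token interactively.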

The output is a list of claims, grouped by input sentence.

I just used the implementation straight from Google:

    import nltk
    import re

    # Sentence tokenizer data used by sent_tokenize
    # (newer NLTK releases may also need nltk.download('punkt_tab'))
    nltk.download('punkt')

    start_marker = '<s>'
    end_marker = '</s>'
    separator = '\n'

    def create_propositions_input(text: str) -> str:
        # Wrap each sentence in <s> ... </s>, one sentence per line
        input_sents = nltk.tokenize.sent_tokenize(text)
        propositions_input = ''
        for sent in input_sents:
            propositions_input += f'{start_marker} ' + sent + f' {end_marker}{separator}'
        propositions_input = propositions_input.strip(f'{separator}')
        return propositions_input

    def process_propositions_output(text):
        # Pull out every <s> ... </s> group; one group per input sentence
        pattern = re.compile(f'{re.escape(start_marker)}(.*?){re.escape(end_marker)}', re.DOTALL)
        output_grouped_strs = re.findall(pattern, text)
        predicted_grouped_propositions = []
        for grouped_str in output_grouped_strs:
            grouped_str = grouped_str.strip(separator)
            # x[2:] drops the two-character prefix at the start of each proposition line
            props = [x[2:] for x in grouped_str.split(separator)]
            predicted_grouped_propositions.append(props)
        return predicted_grouped_propositions
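
To see what these two helpers do without loading the model, here is a small standalone sketch. It swaps NLTK for a naive regex sentence splitter (an assumption, and much cruder than `sent_tokenize`), and it parses a hand-written string shaped the way the parser above expects: one `<s> ... </s>` group per sentence, one proposition per line, each with a two-character `- ` prefix. The sample string is made up for illustration and is not real model output. The names `wrap_sentences` and `parse_groups` are hypothetical.

```python
import re

START, END, SEP = '<s>', '</s>', '\n'


def wrap_sentences(passage: str) -> str:
    """Wrap each sentence in <s> ... </s>, one per line (naive splitter)."""
    sentences = re.split(r'(?<=[.!?])\s+', passage.strip())
    return SEP.join(f'{START} {s} {END}' for s in sentences)


def parse_groups(text: str) -> list:
    """Return one list of propositions per <s> ... </s> group."""
    pattern = re.compile(f'{re.escape(START)}(.*?){re.escape(END)}', re.DOTALL)
    groups = []
    for chunk in pattern.findall(text):
        lines = chunk.strip(SEP).split(SEP)
        groups.append([line[2:] for line in lines])  # drop the "- " prefix
    return groups


passage = ('Sarah Stage, 30, welcomed James Hunter into the world on Tuesday.\n'
           'The baby boy weighed eight pounds seven ounces and was 22 inches long.')
model_input = wrap_sentences(passage)
print(model_input)

# Hand-written sample in the assumed output format (not real model output).
sample_output = (
    '<s>\n- Sarah Stage is 30.\n- Sarah Stage welcomed James Hunter.\n</s>\n'
    '<s>\n- The baby boy weighed eight pounds seven ounces.\n</s>'
)
groups = parse_groups(sample_output)
print(groups)
```

Each inner list corresponds to one input sentence, which is why the final result is a list of lists rather than a flat list.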

    from transformers import pipeline
    import torch

    generator = pipeline('text-generation', 'google/gemma-2b-aps-it', device_map='auto', torch_dtype=torch.bfloat16)

    passage = 'Sarah Stage, 30, welcomed James Hunter into the world on Tuesday.\nThe baby boy weighed eight pounds seven ounces and was 22 inches long.'
    messages = [{'role': 'user', 'content': create_propositions_input(passage)}]
    output = generator(messages, max_new_tokens=4096, return_full_text=False)
    result = process_propositions_output(output[0]['generated_text'])
    print(result)

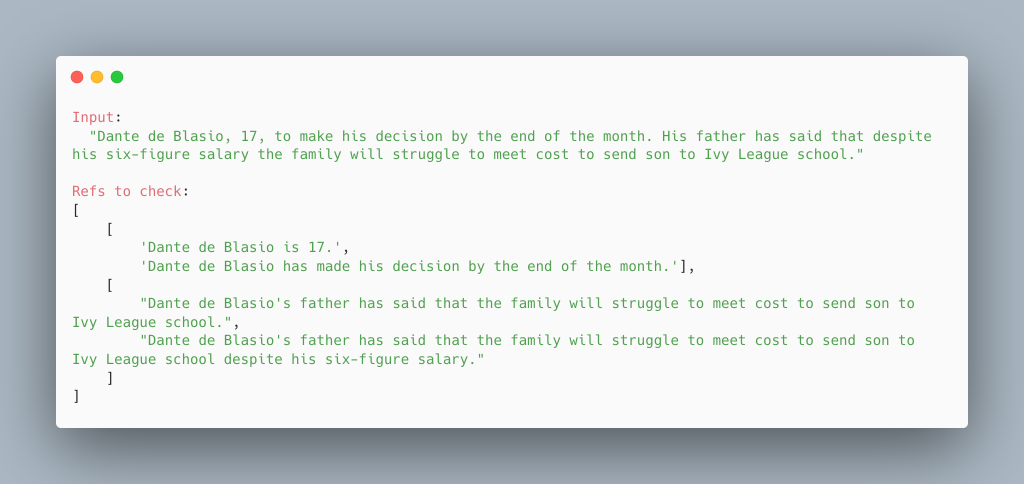

    passage = 'Dante de Blasio, 17, to make his decision by the end of the month. His father has said that despite his six-figure salary the family will struggle to meet cost to send son to Ivy League school.'
    messages = [{'role': 'user', 'content': create_propositions_input(passage)}]
    output = generator(messages, max_new_tokens=4096, return_full_text=False)
    result = process_propositions_output(output[0]['generated_text'])
    print(result)
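
Since the same three lines repeat for every passage, they could be folded into one helper. The sketch below is my own wrapper, not part of Google's code: `extract_claims` is a hypothetical name, and it takes the generator and the two helper functions as arguments so it can be tried without loading the model. It also flattens the per-sentence groups into a single list, on the assumption that downstream steps want to handle each claim on its own.

```python
from typing import Callable, List


def extract_claims(generator: Callable,
                   passage: str,
                   build_input: Callable[[str], str],
                   parse_output: Callable[[str], List[List[str]]],
                   max_new_tokens: int = 4096) -> List[str]:
    """Run one passage through the generator and return a flat list of claims."""
    messages = [{'role': 'user', 'content': build_input(passage)}]
    output = generator(messages, max_new_tokens=max_new_tokens, return_full_text=False)
    grouped = parse_output(output[0]['generated_text'])
    # Flatten the per-sentence groups and drop empty strings.
    return [claim.strip() for group in grouped for claim in group if claim.strip()]
```

With the objects defined earlier in this post, the call would look like `extract_claims(generator, passage, create_propositions_input, process_propositions_output)`.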