Test Drive: opik

Published on .

Today I test drove opik, “an end-to-end LLM evaluation platform designed to help AI developers test, ship, and continuously improve LLM-powered applications.” It was already on my list to try, but yesterday there was a Reddit post about it, and it’s similar to Langtrace, so today was the day! I’m going to get it running locally, capture some traces and run an experiment.

The process involves:

- Install via `git clone`, `docker-compose` and `opik configure --use_local`
- Instrument
- Evaluate

Installation was a breeze, except that my out-of-date version of Docker kept the backend app from running at first. Once it’s running, you get a nice browser-based UI.

From there, I implemented their simple tracing like this:

import textwrap

from opik import track

from opik.integrations.openai import track_openai

openai_client = track_openai(OpenAI())

description = """PTT Public Company Limited is a Thailand-based company engaged in the gas and petroleum businesses. The Company supplies, transports and distributes natural gas vehicle (NGV), petroleum products and lubricating oil via service stations throughout Thailand and also exports to overseas markets. Through its subsidiaries and affiliated companies, the Company is involved in exploration, production, refinery, marketing and distribution of petroleum, petrochemical products and aromatics. In addition, the Company operates international trade businesses, including import and export of crude oil, condensates, petroleum products, petrochemicals, and sourcing of international transport vessels and carriers."""

@track

def classify(description):

completion = openai_client.chat.completions.create(

model="gpt-4o-mini",

messages=[

{"role": "system", "content": textwrap.dedent(f"""\

You will be given a description of a company.

Classify the company into an industry in json, with key

- industry: str

Return only the name of the industry, and nothing else.

Company Description: {description}""")}

],

response_format={ "type": "json_object" },

)

return completion.choices[0].message.content

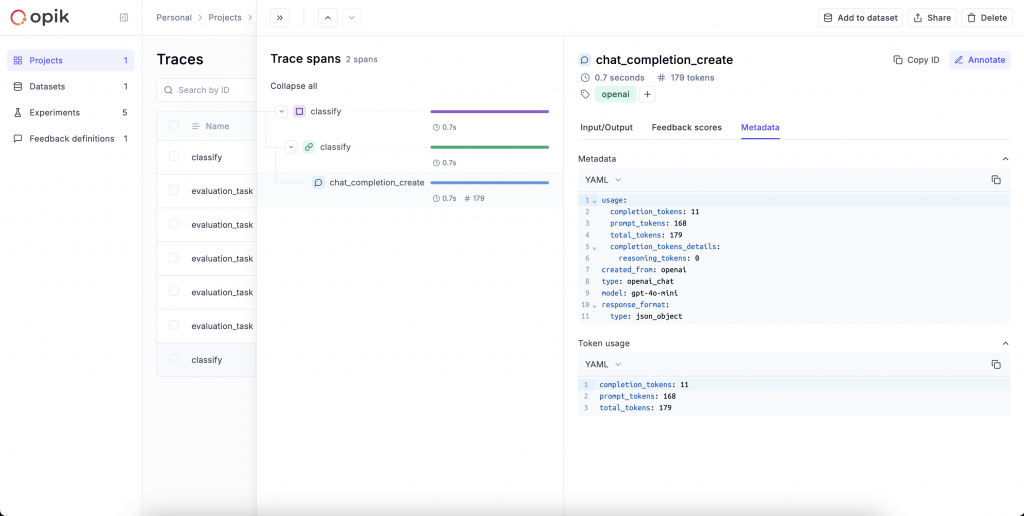

classify(description)From that, you get a terrific trace of your program
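To make concrete what `@track` captures, here is a toy, offline sketch of a tracing decorator. It is not opik’s implementation: `toy_track`, `TRACES` and `classify_stub` are hypothetical names, and the stub returns a canned answer instead of calling OpenAI. As I understand it, the real decorator sends this kind of data to the opik backend, where the call logged by `track_openai` shows up inside the same trace.

```python
import functools
import time

# Hypothetical in-memory list standing in for opik's backend
TRACES = []

def toy_track(func):
    """Toy decorator: records name, inputs, output and duration of each successful call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        output = func(*args, **kwargs)
        TRACES.append({
            "name": func.__name__,
            "input": {"args": args, "kwargs": kwargs},
            "output": output,
            "duration_s": time.perf_counter() - start,
        })
        return output
    return wrapper

@toy_track
def classify_stub(description):
    # Hypothetical stand-in for the OpenAI call so the sketch runs offline
    return '{"industry": "Oil & Gas"}'

classify_stub("PTT Public Company Limited is a Thailand-based company ...")
print(TRACES[0]["name"], TRACES[0]["output"])
```

The point is only that a decorator can record inputs, outputs and timing without changing the function body, which is why adding `@track` is a one-line change.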

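Next up is an evaluation. My demo evaluation just uses their built-in `IsJson()` metric, and my dataset is just two toy examples. To give the metric something to catch, the task swaps in the non-JSON string `'foo'` about half the time.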

```python
import random
import textwrap

from openai import OpenAI
from opik import Opik
from opik.evaluation import evaluate
from opik.evaluation.metrics import IsJson
from opik.integrations.openai import track_openai

openai_client = track_openai(OpenAI())

# Fetch the "Companies" dataset (two toy examples) from the opik server
dataset = Opik().get_dataset(name="Companies")

def evaluation_task(dataset_item):
    completion = openai_client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": textwrap.dedent(f"""\
                You will be given a description of a company.
                Classify the company into an industry in json, with key
                - industry: str
                Return only the name of the industry, and nothing else.
                Company Description: {dataset_item.input['description']}""")}
        ],
        response_format={"type": "json_object"},
    )
    return {
        "input": dataset_item.input['description'],
        # Return 'foo' (not valid JSON) about half the time so IsJson has failures to score
        "output": completion.choices[0].message.content if random.random() > 0.5 else 'foo',
    }

metrics = [IsJson()]

eval_results = evaluate(
    experiment_name="my_evaluation",
    dataset=dataset,
    task=evaluation_task,
    scoring_metrics=metrics,
)
```

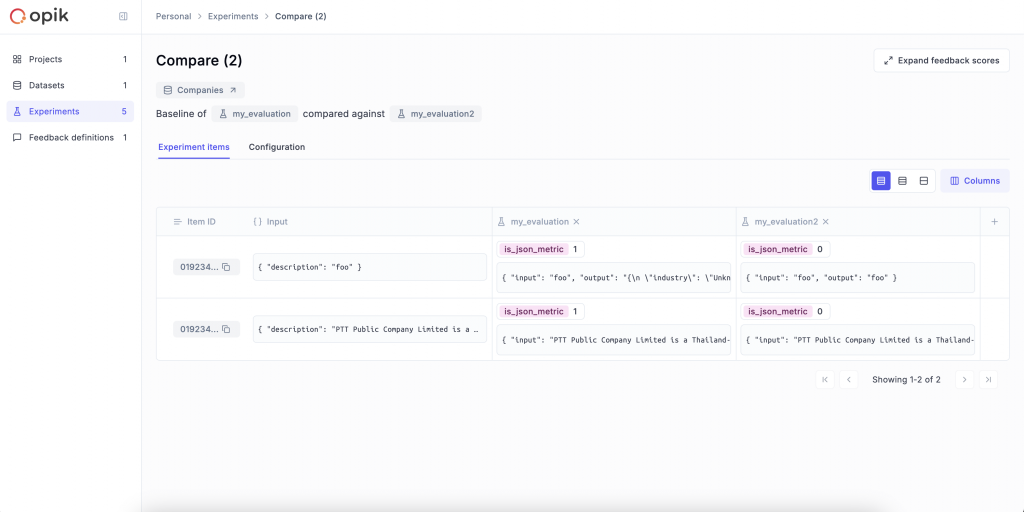

I found the experiment comparison view to be really insightful, even for my demo data.
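For a feel of what the metric is scoring, here is an offline sketch of an IsJson-style check applied to outputs like the ones `evaluation_task` produces. `is_json_score` is my own hypothetical stand-in, assuming opik’s `IsJson` essentially tests whether the output parses as JSON; it is not the library’s code, and the simulated outputs are made up.

```python
import json
import random

def is_json_score(output) -> float:
    """Hypothetical IsJson-style check: 1.0 if the output parses as JSON, else 0.0."""
    try:
        json.loads(output)
    except (json.JSONDecodeError, TypeError):
        return 0.0
    return 1.0

# A JSON-mode reply parses; the injected 'foo' does not
print(is_json_score('{"industry": "Oil & Gas"}'))  # 1.0
print(is_json_score("foo"))  # 0.0

# Simulate the coin flip in evaluation_task over many items (seeded for repeatability)
rng = random.Random(0)
outputs = ['{"industry": "Energy"}' if rng.random() > 0.5 else "foo" for _ in range(1000)]
average = sum(is_json_score(o) for o in outputs) / len(outputs)
print(f"average score: {average:.2f}")  # close to 0.5
```

With only two dataset items, a single run can only average 0.0, 0.5 or 1.0.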